The unintended effects of graph-based ML applications

Talk to the City is first and foremost a platform for ingesting, analyzing and publishing public deliberations.

However, in this writeup we will visit some of the unintended side effects of Talk to the City that we discovered while trying out new connections for the sake of exploration. None of the use cases you are about to see were ever requested or explicitly built. Rather, they are the result of connecting nodes together over the holidays and seeing what happens.

Let's begin with a new node: webpage_v0. It takes in a URL, fetches the webpage, processes it using the readability.js library, and outputs the plain text.
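To make the idea concrete, here is a minimal sketch of a "URL in, plain text out" step. It is not the webpage_v0 implementation: it uses Python's standard-library HTML parser as a crude stand-in for readability.js, so it keeps all visible text rather than isolating the main article. The names `html_to_text` and `webpage_to_text` are hypothetical.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class _TextExtractor(HTMLParser):
    """Collects visible text and ignores the contents of non-visible tags."""

    SKIPPED = {"script", "style", "noscript", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped tag
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self):
        return "\n".join(self._chunks)


def html_to_text(html):
    """Return the visible text of an HTML document, one text run per line."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


def webpage_to_text(url, timeout=10):
    """Fetch a URL and return its visible text (hypothetical helper)."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return html_to_text(response.read().decode(charset, errors="replace"))
```

A real readability pass would also drop navigation, footers and similar page furniture, which this sketch does not attempt.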

As we can see above, a node on its own isn't really useful. So let's add another node: the markdown_v0 node. This node takes in a string, or a CSV, passes its contents through the marked library, and displays the result as sanitized, rendered HTML.

This is quite useful, as display nodes like markdown_v0 serve as good sanity checks, to verify our data is indeed correct.

Let's try something a bit more useful this time, by connecting the webpage into a count_tokens_v0 node.

We can now check whether the page fits in a model's context window.
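As a rough illustration of that check, the sketch below estimates a token count and compares it against a context window. It assumes the common rule of thumb of about four characters per token for English text, which is only an approximation; an exact count requires the model's own tokenizer. The function names and the `reserved_for_reply` parameter are hypothetical.

```python
import math

# Assumption: roughly 4 characters per token for English text.
# This is a heuristic, not a tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text):
    """Return an approximate token count for the given text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text, context_window, reserved_for_reply=500):
    """Check whether the text, plus room for a reply, fits in the window."""
    return estimate_tokens(text) + reserved_for_reply <= context_window
```

Reserving some tokens for the reply matters because the prompt and the model's answer share the same window.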

At this point, however, it is worth noting that being able to display the contents of a webpage, or count its tokens, was never explicitly programmed into Talk to the City. This may seem like a trivial point made for the sake of narrative, but it isn't. Our only focus has been public deliberations, and the nodes you are seeing here (except for count_tokens_v0 and markdown_v0) were only introduced in the same week that we are writing this article.

What's more, we could randomly select nodes, try connecting them to one another, and keep on writing these kinds of articles ad infinitum. (Eventually, we hope to make these types of articles LLM-generated, interactive examples included.)

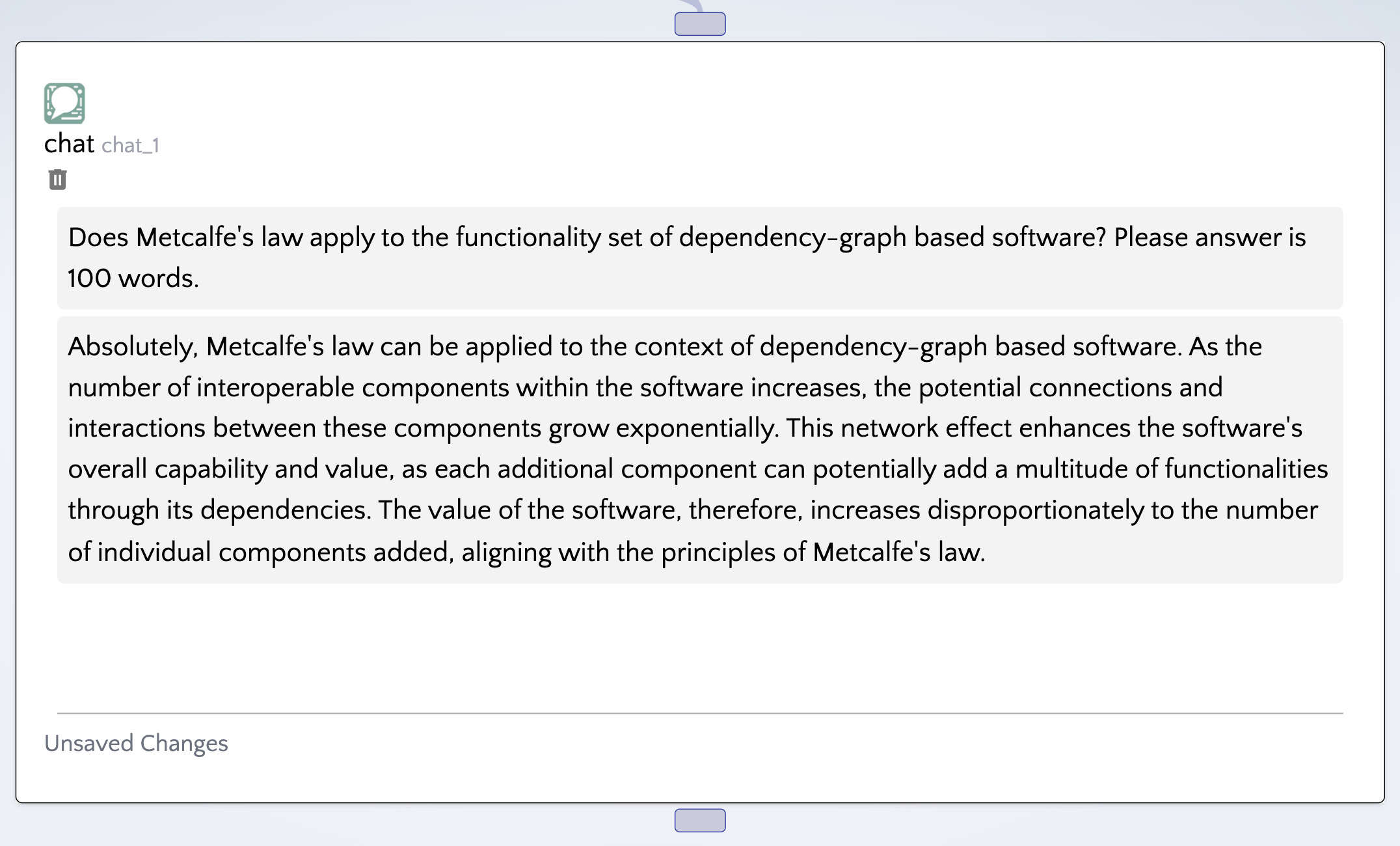

What other interesting side effects can we get from connecting nodes? Let's connect our webpage_v0 node to a chat_v0 node. I invite you to:

- paste your OpenAI key

- paste any website in the webpage node

- run the pipeline (robot icon)

- type the question "...........? Please answer in 200 words." and press enter

Here's a question we asked:

This is in line with what we are experiencing at this very early stage in Talk to the City Turbo's lifecycle (2 months since inception): the introduction of certain nodes can lead to an explosion in functionality that is far greater than the functionality of that component alone.

(It is worth noting that functionality won't keep growing exponentially forever, as practical constraints and diminishing returns may set in at some point.)

Is chatting with a webpage useful?

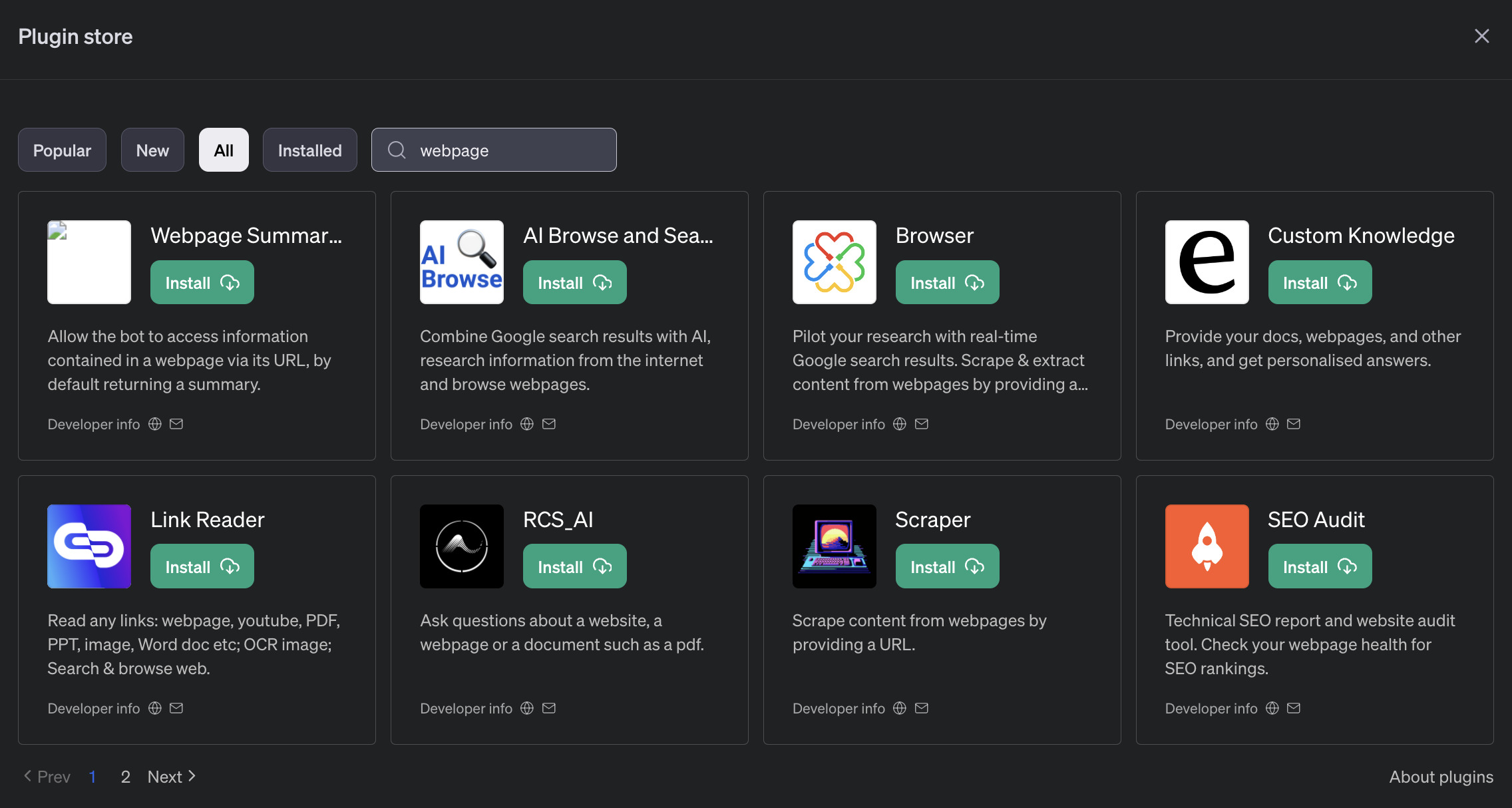

One may ask whether chatting with a webpage is useful. Going by the number of plugins on the OpenAI plugin store, it seems the answer is: "useful enough".

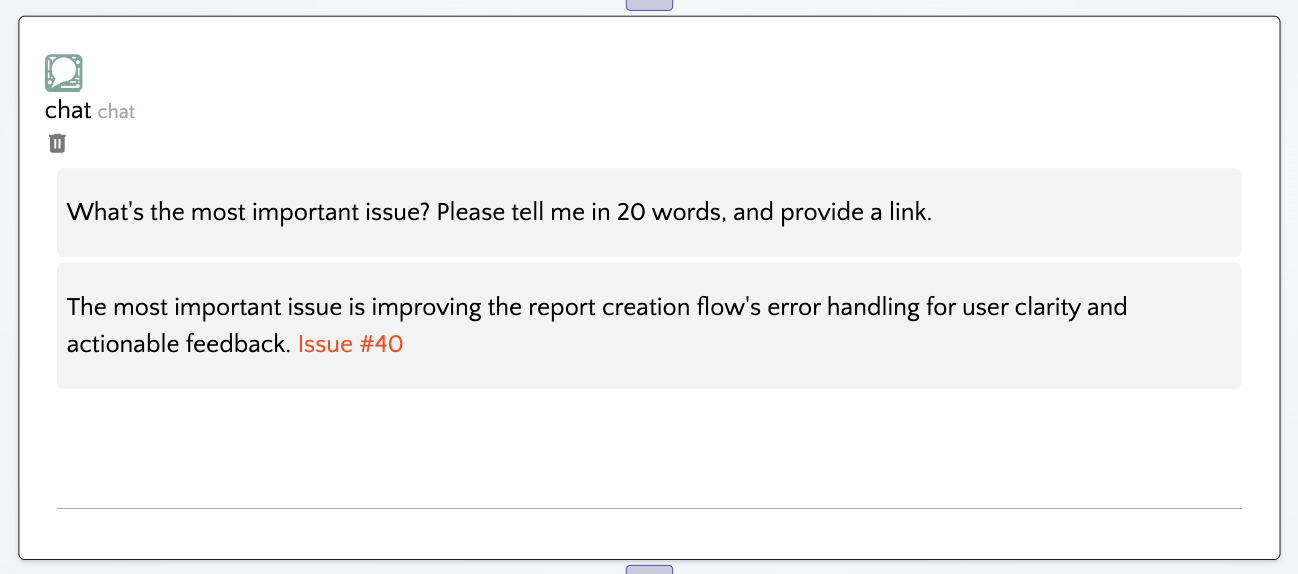

What else can we chat with? We recently introduced the python_v0 node, which runs Python 3.7 on AWS Lambda. So we wrote a small user script that fetches our open issues from GitHub. We plugged it into the chat node and were immediately able to chat with our GitHub issues.
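A script of this kind could look roughly like the sketch below. It is an illustration rather than the script we ran: the repository name is a placeholder, the helper names are hypothetical, and it uses only the standard library and GitHub's public REST endpoint for listing repository issues. That endpoint also returns pull requests, so the sketch filters them out.

```python
import json
from urllib.request import Request, urlopen

# Hypothetical repository; replace with your own "owner/name".
REPO = "example-org/example-repo"


def fetch_open_issues(repo=REPO):
    """Fetch up to 50 open issues (and pull requests) as parsed JSON."""
    url = f"https://api.github.com/repos/{repo}/issues?state=open&per_page=50"
    headers = {"Accept": "application/vnd.github+json", "User-Agent": "issues-sketch"}
    with urlopen(Request(url, headers=headers), timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def issues_to_text(issues):
    """Flatten issues into one line each, ready to hand to a chat node."""
    lines = []
    for issue in issues:
        if "pull_request" in issue:  # the issues endpoint also lists pull requests
            continue
        lines.append(f"#{issue['number']} {issue['title']} ({issue['html_url']})")
    return "\n".join(lines)
```

Including the link in each line is what lets the model answer requests such as "provide a link" without inventing one.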

In the chat node, I invite you to:

- Paste your OpenAI key

- Run the pipeline

- Write a question, e.g. "What's the most important issue? Please tell me in 20 words, and provide a link."

- Press enter.

Here is what we got:

Is the ability to chat with GitHub useful? We certainly use a Slack bot just for this purpose. What if the LLM could open, edit, close tickets, or even better:

- reason about our GitHub issues?

- help us combat duplicate issues, or duplicated work?

- provide a sense of priority to our devs?

- etc.

We then asked ourselves: "what if the LLM could traverse and act on the graph?".

So to find out, we clumsily experimented with function calling. The prompt told the chat_v0 node to act as a personal task manager. It had a single function it could call, which simply updated the markdown node.
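The setup described above can be sketched as follows. This is a hedged illustration, not our node code: the function name `update_markdown`, its schema, and the `markdown_node` dictionary are all hypothetical. The schema follows the general shape used for LLM function calling (a name, a description, and JSON Schema parameters), and the dispatcher assumes the model returns a function name plus its arguments as a JSON string.

```python
import json

# Hypothetical stand-in for the markdown node's state.
markdown_node = {"content": ""}

# Function description advertised to the model (names are assumptions).
UPDATE_MARKDOWN_SCHEMA = {
    "name": "update_markdown",
    "description": "Replace the markdown node's contents with the full, updated todo list.",
    "parameters": {
        "type": "object",
        "properties": {
            "markdown": {"type": "string", "description": "The complete todo list as Markdown."}
        },
        "required": ["markdown"],
    },
}


def update_markdown(arguments):
    """Overwrite the markdown node with the list supplied by the model."""
    markdown_node["content"] = str(arguments.get("markdown", ""))
    return "markdown node updated"


HANDLERS = {"update_markdown": update_markdown}


def dispatch(function_call):
    """Run a model-requested call of the form {"name": str, "arguments": JSON string}."""
    handler = HANDLERS.get(function_call["name"])
    if handler is None:
        return "unknown function: " + function_call["name"]
    try:
        arguments = json.loads(function_call["arguments"])
    except json.JSONDecodeError:
        return "arguments were not valid JSON"
    if not isinstance(arguments, dict):
        return "arguments must be a JSON object"
    return handler(arguments)
```

Returning error strings instead of raising lets the chat loop feed the problem back to the model, which can then retry with corrected arguments.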

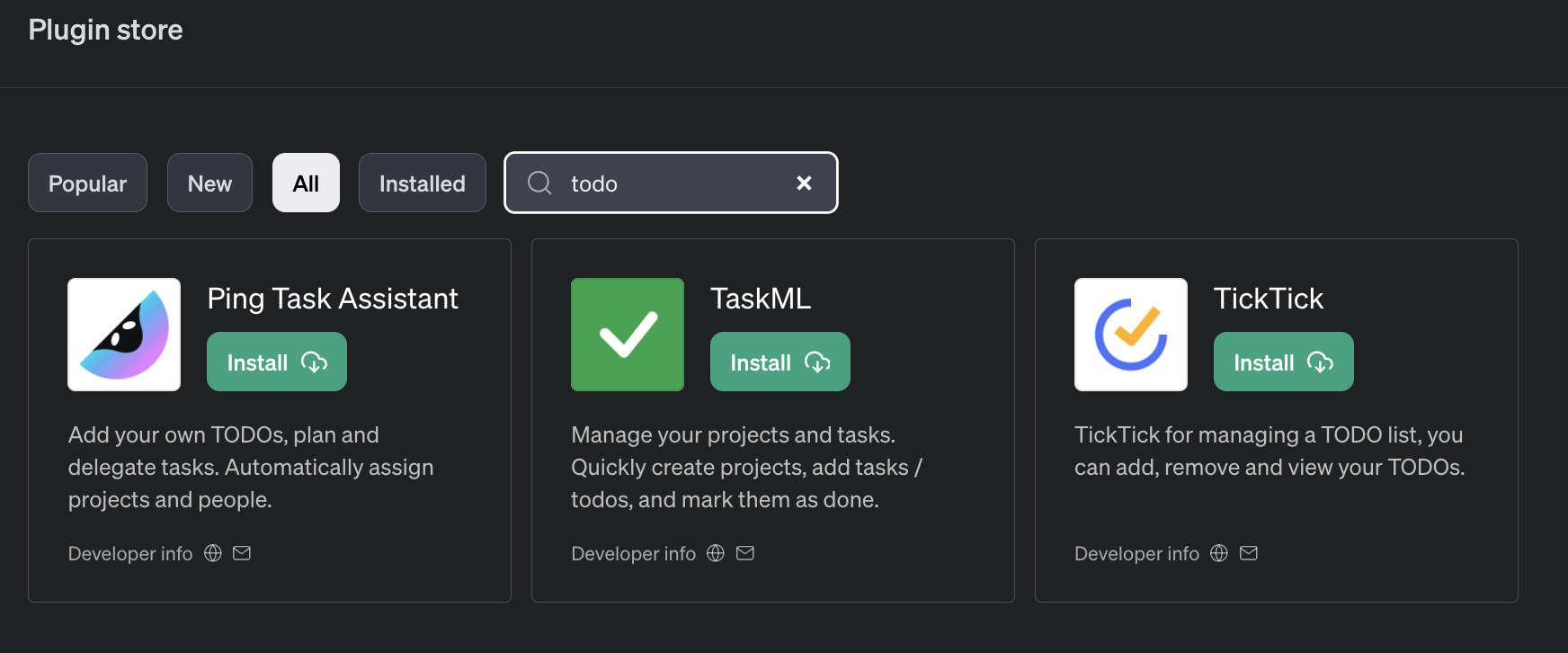

The result, as you can see above, was a rather entertaining chat-based todo manager. Once again, we have to ask: is this useful?

If the OpenAI plugin store is anything to go by as a measure of utility, the answer is, once again, potentially yes.

Where to go from here?

Talk to the City is first and foremost a public deliberations platform. At AOI, we firmly believe that one of the most meaningful use cases for AI is sifting through public surveys on important public matters, then organizing and publishing the voices of the people in an easy-to-digest format.

Our first priority is the accuracy of the reports we produce, and their faithfulness to the voices they hope to represent. Having experimented with multi-agent platforms, e.g. AutoGPT, we are wary of full automation with extensive capabilities, and are more in favour of a slow and deliberate progression with a lot of testing (Talk to the City has, to date, 100 tests and counting).

However, we will continue experimenting once in a while to see whether our public deliberations platform has the potential to serve other use cases.

The pattern of having...

- data in a graph,

- capable utility nodes in a graph,

- specialized LLM nodes in a graph,

- general LLM and chat nodes in a graph,

... has so far enabled us to create sophisticated LLM pipelines that have served our purpose well.
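The pattern above can be illustrated with a very small dataflow graph. This is a sketch under our own simplifying assumptions, not Talk to the City's engine: the `Graph` class, its method names, and the example node ids are hypothetical. Each node is a function, and running a node first runs everything it depends on.

```python
class Graph:
    """A tiny dataflow graph: each node computes a value from its upstream nodes."""

    def __init__(self):
        self.nodes = {}   # node id -> compute function
        self.inputs = {}  # node id -> list of upstream node ids

    def add_node(self, node_id, compute, inputs=()):
        self.nodes[node_id] = compute
        self.inputs[node_id] = list(inputs)

    def run(self, node_id, cache=None, visiting=None):
        """Evaluate a node, computing each upstream node at most once."""
        cache = {} if cache is None else cache
        visiting = set() if visiting is None else visiting
        if node_id in cache:
            return cache[node_id]
        if node_id in visiting:
            raise ValueError("cycle detected at node " + node_id)
        visiting.add(node_id)
        upstream = [self.run(i, cache, visiting) for i in self.inputs[node_id]]
        visiting.discard(node_id)
        cache[node_id] = self.nodes[node_id](*upstream)
        return cache[node_id]


# Data node -> utility node -> display node (all hypothetical).
graph = Graph()
graph.add_node("data", lambda: "Citizens want safer bike lanes and more parks.")
graph.add_node("count_words", lambda text: len(text.split()), inputs=["data"])
graph.add_node("report", lambda text, n: f"{n} words: {text}", inputs=["data", "count_words"])
print(graph.run("report"))
```

Because nodes only agree on the values passed along edges, a new node can be wired to existing ones without either side having been written with the other in mind.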

It would seem the next step in terms of research on Talk to the City could be further exploration of:

- having all nodes advertise their capabilities in the form of function calling prompts.

- the ability for chat nodes or executive LLM nodes to:

  - amalgamate function calling abilities for other nodes in the graph.

  - traverse the graph.

  - acquire data from nodes in the graph.

  - call functions on nodes in the graph.

- etc.
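One way these ideas might fit together is sketched below. It is speculative and illustrative only: the `Node` class, the `capabilities`, `amalgamate` and `call` names, and the `node_id__function` naming convention are assumptions, and the parameter schemas are left empty for brevity. Each node advertises its functions, a chat node merges them into one list for the model, and a returned function name is routed back to the node that owns it.

```python
class Node:
    """A graph node that advertises callable functions (hypothetical design)."""

    def __init__(self, node_id, functions):
        # functions: name -> (description, callable taking keyword arguments)
        self.node_id = node_id
        self.functions = functions

    def capabilities(self):
        """Describe this node's functions, prefixed with the node id."""
        return [
            {
                "name": f"{self.node_id}__{name}",
                "description": description,
                "parameters": {"type": "object", "properties": {}},  # elided in this sketch
            }
            for name, (description, _) in self.functions.items()
        ]


def amalgamate(nodes):
    """Merge the advertised functions of every node into a single list."""
    schemas = []
    for node in nodes:
        schemas.extend(node.capabilities())
    return schemas


def call(nodes, qualified_name, **arguments):
    """Route "node_id__function" to the owning node (ids must not contain "__")."""
    node_id, _, name = qualified_name.partition("__")
    for node in nodes:
        if node.node_id == node_id and name in node.functions:
            return node.functions[name][1](**arguments)
    raise KeyError(qualified_name)
```

Prefixing each function with its node id keeps names unique when two nodes of the same type appear in one graph.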

The ability to build these mini-apps by connecting a handful of nodes in the graph seems promising. Although our focus remains public deliberations, we will keep exploring in case we discover something useful.